First Hand Experience on the Path to Continuous Deployment for Docker Containers

Authors note: this is a summary of my session at DevOps Summit NY on June 8, 2017. For details on the session along with slides, visithttp://datagridsys.com/nomorescripts.

Being experienced startup folks we are not scared of bleeding edge tech. For instance, in 2005 we made the jump to virtual machines when most companies hadn't even heard of virtualization, in order to create our cloud platform, AppLogic. This time around we were already very familiar with containers, including many of the limitations, and believed they offered a lot of advantages in speed and cost so we decided to go with Docker running on AWS.

Our engineers have written a lot of apps including both interactive user apps and infrastructure level services and we're pretty adept at creating stateless systems where that's possible. Unfortunately, some of the third party software we needed to use, especially open source, wasn't written with stateless in mind.

For us combining Dev and Ops wasn't a choice, but a necessity. We couldn't afford another hire to run ops. Nonetheless we're fanatics for DevOps and automating everything we can in build, test and deploy. In our case the role of the DevOps guru fell on Pavel, one of our senior engineers. He's well versed in systems architecture as well as most of the systems that needed to be touched by the automation.

Introducing VCTR

The app we were bringing out is called Victor (VCTR for Vulnerabilities and Configuration Tracking and Reporting), a free online service that scans containers and VMs for upgrades that fix critical vulnerabilities. You can check out VCTR here.

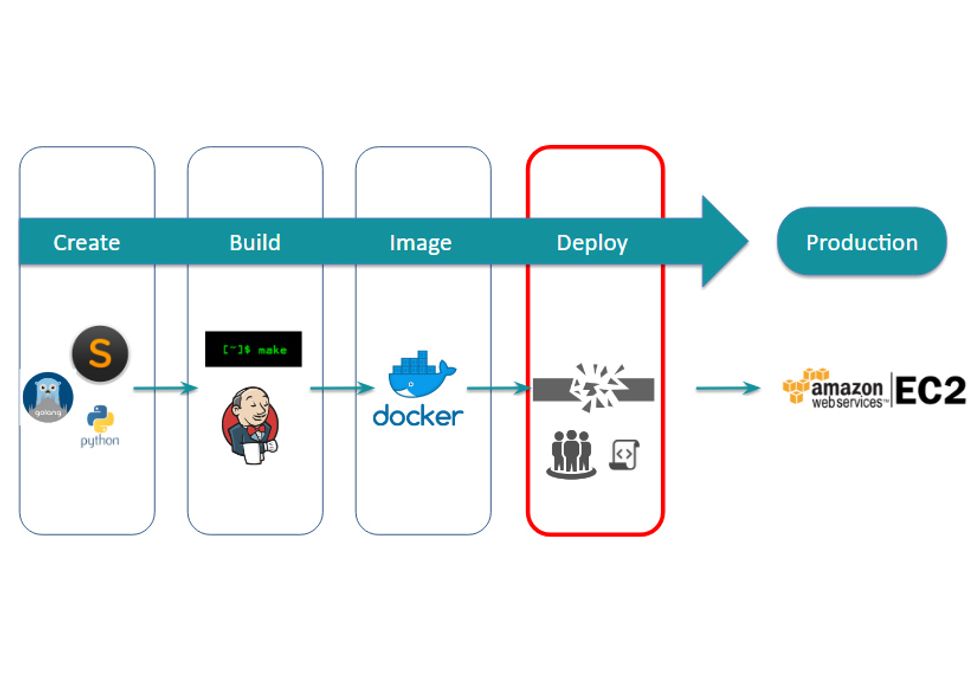

As we progressed through development some things worked exceptionally well. New tools including Slack made collaboration among a dispersed team very easy. Build and test tools including Jenkins worked as expected though they were a bit harder to set up than they should be. Docker, despite some minor issues, was more mature than we expected. As you probably have guessed, though, there's a "however" coming. In our case, that "however" was that deployments were still complicated and error prone.

- understand changes -> understand current app -> write script

- test script -> debug -> deploy

- fail -> debug again -> redeploy

- manual rollback...

- give up

The options were minimal. Either we continue to fail through this deployment until we got it right, or find another solution to the problem

Deployment Pain

Despite deployment issues hindering our velocity we needed to push forward with VCTR so we started user engagement while looking for solutions. We assumed we'd find a new tool or pattern in time, but things took an unexpected turn. While implementing VCTR we found other folks having similar deployment issues. Bringing up a new user with VCTR requires installing a small piece of code in the container or VM. That process, not surprisingly, happens in a deployment script and in most cases inserting that one change caused a failure in deployment. In one instance, while on a video call with a user, we watched as a five minute change to one script turned into a two hour debugging marathon involving more than half a dozen scripts. The only folks who didn't have errors after making the change had actually recognized deployment as a significant issue and built their own system for it. Systems, so significant in the amount of time they took to build that they were given names and teams to maintain them. However, those systems were tied to their company's specific architecture and tool chain.

The heart of the problem is that despite articles to the contrary, containers and microservices have dependencies. Some dependencies are simple. For instance, some software still have start order dependencies. Others require configuration differences based on the environment they're running in. Still other require logging in and executing commands after startup. Some dependencies you create yourself. As an example, changing an API requires updates to both the providing and calling services. You might require all updates to be backward compatible, but that's just moving the work to what may be an even more complicated arena. Lastly, what happens when something fails? How do you find out what failed? How do you get back to a functional state?

Despite mounting evidence we didn't initially recognize the pattern. One day over lunch, as the discussion turned from VCTR to deployment issues we realized we'd seen similar problems before when we first tackled cloud deployment. Furthermore, we knew we could solve this. We decided to build a continuous deployment system.

Lessons Learned

We obviously learned a ton about what not to do when setting up a new containerized application. Here's our conclusion of the good and bad of containers, microservices and DevOps:

The good

- Containers, though not perfect, have a rich enough ecosystem of tools to be used in production.

- Microservices architectures are great but don't try to make everything stateless.

- DevOps is the new norm, but you need to go beyond just assigning an engineer the title or merging the folks onto a single team. Ops must take on a new role defining use cases and Dev must embrace Ops.

- Get your tool chain in place from the start. We tried to hack our deployments and as such didn't see the problem for what it was until we were knee deep in it.

The bad

- Deployment remains hard because it's where software meets the real world.

- Deployment requires a system once you get past a few containers. If you don't adopt one, you're going to end up building one - whether you realize it or not.

- Scripts work fine for small apps that don't get updated often, but aren't up to the task for continuous deployment for apps that contain more than a few containers or microservices, especially those that are updated frequently.

The Result - Continuous Deployment System

We identified key challenge patterns that both ourselves and others faced during deployment and turned them into solutions. One important difference to the other deployment systems we had seen was that we made sure to build for varied environments and architectures. We didn't want this tool to just work for us or rather just the current pattern we were working in. We needed a true system to grow with us. Here are some of the main requirements of our Continuous Deployment system:

- Integrate with popular tool chain elements

- Recognize code and architecture updates

- Provide for identifying dependencies

- Provide for various operating environments

- Automatically deploy authorized updates to operating environments

- Automatically correct for common errors to return proper operation

- Provide meaningful logs and error messages

- Be operable from either UI, API or completely autonomously

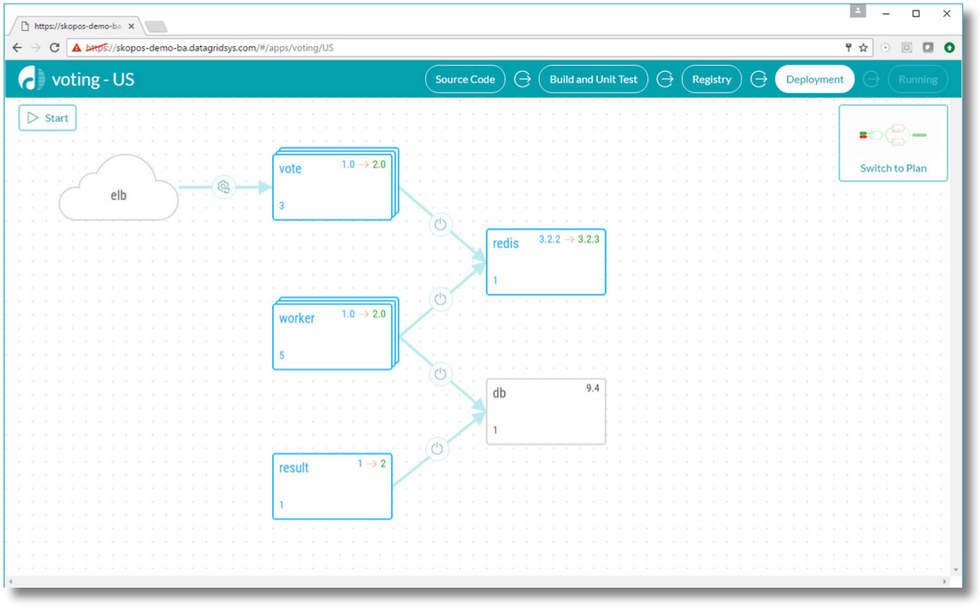

We built this deployment system and it's now deploying VCTR on a regular basis. It's also in production at InnoScale and being tested by a handful of DevOps teams who felt the same pain as we did. We're offering the system for free to teams for up to 15 containers.

To learn more about Skopos - our continuous deployment system visit http://datagridsys.com/skopos/.