Below is an article originally written by Josh Tobin, and published on July 8, 2021.

One of the biggest misconceptions I hear from laypeople about AI is the belief that machine learning models get smarter as they interact with the world.

My friend told me a story about a bug that cost his company millions of dollars and illustrates why this perception is wrong. When his boss asked him to investigate a spike in user churn, he realized that a change in a feature definition for recently registered users had broken his model. New users were leaving because his model had recommended random items to them for nearly a month.

The issue isn't just data bugs: the static machine learning models we learned to train in school are downright fragile in the real world. Your inventory forecasting model might break because COVID-19 changes shopping behavior. Your sales conversion model might break because a new marketing campaign succeeded. Your autonomous vehicle might even break because you don't have enough kangaroos in the training set. Static models degrade because real-world data is almost never static, so your training set doesn't stay representative of the real world for long.

So, as hard as it is for us ML PhDs to admit, most of the work happens after you train the model. After grad school, as I spent more time with teams building ML-powered products, I realized that the best ones don't see their job as training static models. Instead, they aim to build a continual learning system, a piece of infrastructure that can adapt the model to a continuously evolving stream of data.

At Gantry, we want to help teams build ML-powered products that improve as they interact with the world, much like laypeople believe they do already. We think the key to doing so is to transition from training static models to building continual learning systems.

From static models to continual learning systems

In static ML, you are given a dataset and your job is to iterate on the learning algorithm until the model performs well. Continual ML adds dataset curation as an outer loop to the iteration process.

Continual learning systems adapt models to the evolving world by repeatedly retraining them on newly curated production data. For example, if you retrain your model every month on a sliding window of data, then you have built a simple continual learning system.
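A monthly sliding-window retrain can be sketched in a few lines. This is a minimal illustration under stated assumptions: each production example carries a timestamp, a "month" is approximated as 30 days, and `train_fn` is a hypothetical stand-in for your real training job. None of these names come from the article.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Any, Callable, List


@dataclass
class Example:
    timestamp: datetime  # when the example was observed in production
    features: Any
    label: Any


def sliding_window(examples: List[Example], now: datetime,
                   window: timedelta = timedelta(days=30)) -> List[Example]:
    """Keep only examples observed in the half-open interval (now - window, now]."""
    start = now - window
    return [ex for ex in examples if start < ex.timestamp <= now]


def retrain_monthly(examples: List[Example], train_fn: Callable[[List[Example]], Any],
                    start: datetime, months: int) -> List[Any]:
    """Retrain once per 30-day period, each time on only the most recent window.

    train_fn is a hypothetical placeholder for your actual training job.
    """
    models = []
    now = start
    for _ in range(months):
        now += timedelta(days=30)  # approximate a month as 30 days
        models.append(train_fn(sliding_window(examples, now)))
    return models


# Usage: 90 days of synthetic examples, one per day; "training" just counts examples.
t0 = datetime(2021, 1, 1)
data = [Example(t0 + timedelta(days=i), features=None, label=None) for i in range(90)]
print(retrain_monthly(data, train_fn=len, start=t0, months=3))  # [30, 30, 29]
```

In production, a scheduler would drive the loop rather than a `for` statement, but the data-selection step stays the same: older data simply falls out of the window.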

Your continual learning system only succeeds if the performance benefit from retraining outweighs the cost. So the more valuable your model, the more quickly your system needs to react to degradations in performance and the more carefully it needs to test the new model to make sure it truly performs better. The more expensive it is to retrain, the more intelligently your system should curate the next dataset using techniques like active learning.
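One common active learning strategy is uncertainty sampling: send labelers only the production examples that the current model is least sure about. The sketch below scores uncertainty as the entropy of the predicted class probabilities. It assumes `predict_proba` is any callable that returns a probability list for one example, and that you set the labeling `budget` from your own labeling costs. This is one illustrative technique, not necessarily the one any particular team uses.

```python
import math
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(unlabeled: List[T],
                        predict_proba: Callable[[T], Sequence[float]],
                        budget: int) -> List[T]:
    """Uncertainty sampling: return the `budget` examples with the highest predictive entropy."""
    ranked = sorted(unlabeled, key=lambda x: entropy(predict_proba(x)), reverse=True)
    return ranked[:budget]


# Usage with a toy "model" that returns fixed probabilities per example.
toy_probs = {"a": [0.99, 0.01], "b": [0.5, 0.5], "c": [0.7, 0.3]}
print(select_for_labeling(["a", "b", "c"], toy_probs.__getitem__, budget=2))  # ['b', 'c']
```

Spending the labeling budget this way concentrates it on the examples most likely to change the model, which matters most when retraining and labeling are expensive.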

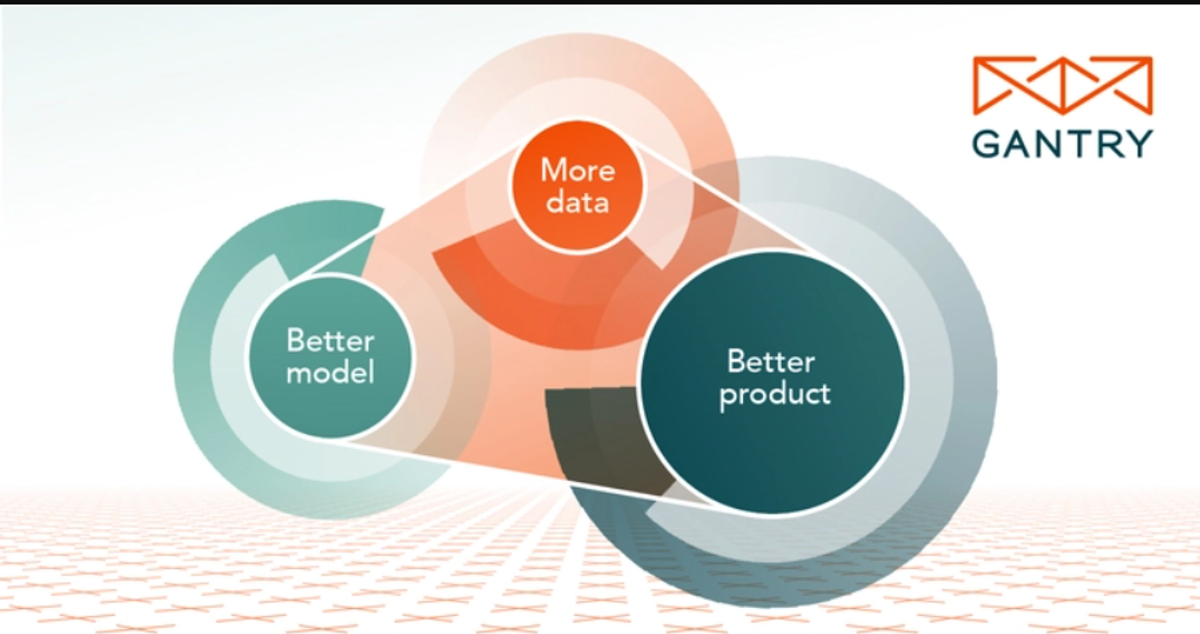

When it does succeed, a continual learning system can make your product improve as it interacts with the world. As you collect new data, you can trigger a data flywheel effect: more data leads to a better model, which improves your product, which helps you acquire more users and more data, and the flywheel keeps turning.

Despite the benefits of continual learning systems, few teams have implemented them successfully. ML practitioners in the Full Stack Deep Learning alumni network cited the components of the continual learning system — post-deployment monitoring, maintenance, and retraining — as the most challenging aspects of the ML lifecycle.

So, why has this transition been difficult?

Continual learning has an evaluation problem

My friend's team reacted to their expensive data pipeline bug by building a suite of tools to evaluate the performance of their models more granularly. Catching the degradation in performance for new users quickly would have allowed them to mitigate it by retraining or rolling back the data pipeline. Similarly, for most of us, the challenge with continual learning isn't building the system; it's understanding model performance well enough to operate it.
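To make "more granularly" concrete, here is a sketch of slice-level monitoring. It computes accuracy per user segment in a baseline window and in a current window, then flags segments whose accuracy dropped by more than a threshold. The `days_since_signup` field, the 30-day cutoff for "new" users, the 5-point threshold, and the synthetic data are all illustrative assumptions. The toy numbers show how a large drop in a small segment can hide inside a modest change in the overall metric.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Record = Tuple[dict, int, int]  # (example features, prediction, ground-truth label)


def accuracy_by_slice(records: Iterable[Record],
                      slice_fn: Callable[[dict], str]) -> Dict[str, float]:
    """Group records by slice_fn(example) and compute accuracy within each group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for example, pred, label in records:
        s = slice_fn(example)
        total[s] += 1
        correct[s] += int(pred == label)
    return {s: correct[s] / total[s] for s in total}


def degraded_slices(baseline: Dict[str, float], current: Dict[str, float],
                    max_drop: float = 0.05) -> List[str]:
    """Slices whose accuracy fell by more than max_drop (absolute) versus baseline."""
    return sorted(s for s in current if s in baseline and baseline[s] - current[s] > max_drop)


def user_segment(example: dict) -> str:
    # Hypothetical feature: users who signed up less than 30 days ago count as "new".
    return "new" if example["days_since_signup"] < 30 else "existing"


def overall(example: dict) -> str:
    return "all"  # a single slice containing every record


def make_records(n: int, n_correct: int, days: int) -> List[Record]:
    # Synthetic data: the prediction is always 1; the label is 1 for the first n_correct records.
    return [({"days_since_signup": days}, 1, int(i < n_correct)) for i in range(n)]


baseline = make_records(90, 81, days=365) + make_records(10, 9, days=3)
current = make_records(90, 81, days=365) + make_records(10, 5, days=3)

print(degraded_slices(accuracy_by_slice(baseline, overall),
                      accuracy_by_slice(current, overall)))       # [] (0.90 -> 0.86)
print(degraded_slices(accuracy_by_slice(baseline, user_segment),
                      accuracy_by_slice(current, user_segment)))  # ['new'] (0.90 -> 0.50)
```

The overall accuracy moves by only four points, below the alert threshold, while the new-user segment collapses. Alerting per slice is what surfaces that failure.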

To see why, let's view the continual learning system through the lens of control systems. Think of a control system as any machine, like a plane, that adapts to a constantly evolving environment to achieve its goal. The plane's pilot flies it by operating a control loop. As conditions change, the pilot adjusts the flight path with actuators (knobs, pedals, etc.), observes the effect of the adjustment by reading instruments, and uses the observation to decide on the next adjustment.

We operate our continual learning systems in a similar way. We adjust the system when we ingest, label, or featurize data points, or when we train a new model or promote a different version to production.

Thanks to an explosion in MLOps tooling, in 2021 we can actuate the continual learning system entirely using open-source libraries. We can ingest data using Apache Kafka, label it using Label Studio, and featurize it using Feast. We can retrain the model using an ML library like PyTorch and an orchestrator like Airflow, and deploy it using a serving library like KFServing or Cortex.

At Gantry, we think what's missing are the instruments. Few ML teams today have the visibility into model performance required to decide when to retrain, what data to retrain on, and when a new model is ready to go into production. Instead, ML teams operate their continual learning systems by feel, when they should be operating them by metrics.
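As a rough illustration of operating by metrics, the sketch below turns two of those decisions into explicit rules. It retrains when a live metric falls below a floor, and it promotes a candidate only if the candidate beats production overall by a margin without regressing on any slice. The thresholds, the `"overall"` key, and the rules themselves are assumptions chosen for illustration. Real teams would pick criteria that reflect their own costs.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Policy:
    min_live_metric: float = 0.85      # hypothetical floor for the production metric
    min_improvement: float = 0.01      # candidate must beat production overall by this much
    max_slice_regression: float = 0.0  # tolerated drop on any individual slice


def should_retrain(live_metric: float, policy: Policy) -> bool:
    """Trigger retraining when the monitored production metric falls below the floor."""
    return live_metric < policy.min_live_metric


def ready_to_promote(candidate: Dict[str, float], production: Dict[str, float],
                     policy: Policy) -> bool:
    """Both dicts map slice name -> metric, computed on the same evaluation data.

    Promote only if the candidate improves the "overall" metric by the required margin
    and does not regress on any slice beyond the tolerated amount.
    """
    if candidate["overall"] < production["overall"] + policy.min_improvement:
        return False
    return all(
        candidate.get(s, float("-inf")) >= production[s] - policy.max_slice_regression
        for s in production
        if s != "overall"
    )


policy = Policy()
print(should_retrain(0.80, policy))  # True
prod = {"overall": 0.88, "new_users": 0.82, "existing_users": 0.90}
better_everywhere = {"overall": 0.90, "new_users": 0.84, "existing_users": 0.91}
hurts_new_users = {"overall": 0.91, "new_users": 0.78, "existing_users": 0.95}
print(ready_to_promote(better_everywhere, prod, policy))  # True
print(ready_to_promote(hurts_new_users, prod, policy))    # False
```

The second candidate has the better overall number but is rejected because it degrades new users. Without per-slice instruments, that trade-off would be invisible.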

Where do we go from here?

Understanding the performance of your model well enough to adjust your continual learning system is a challenging organizational, systems, and algorithms problem.

- Stakeholders have different priorities for the model. How can you align the team on what evaluation metrics to use?

- The raw data needed to compute the metrics are generated across your system, including the training infrastructure, serving infrastructure, application code, and other parts of the business. How can you build the data infrastructure necessary to view your metrics in one place, especially at scale?

- Ground truth feedback is delayed, noisy, and expensive. How should you approximate your metrics with limited labels?
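When ground truth is expensive, one standard way to approximate a metric is to label a small random sample of production predictions and report the estimate with a confidence interval rather than as a single number. The sketch below does this for accuracy using the Wilson score interval. It assumes `label_fn` stands in for a hypothetical, costly human-labeling step, and that uniform random sampling is acceptable. It does not address the label delay or label noise described above.

```python
import math
import random
from typing import Any, Callable, List, Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("need at least one labeled example")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def estimate_accuracy(predictions: List[Tuple[Any, Any]],
                      label_fn: Callable[[Any], Any],
                      budget: int, seed: int = 0) -> Tuple[float, Tuple[float, float]]:
    """Label a uniform random sample of `budget` (example, prediction) pairs and return
    the sample accuracy plus its Wilson interval. label_fn is a hypothetical labeler."""
    rng = random.Random(seed)
    sample = rng.sample(predictions, budget)
    correct = sum(int(pred == label_fn(x)) for x, pred in sample)
    return correct / budget, wilson_interval(correct, budget)


print(wilson_interval(50, 100))  # roughly (0.404, 0.596)
```

With 100 labels, an observed 50% accuracy is only pinned down to within about ±10 points. Making that uncertainty explicit helps a team decide whether a metric change is real before acting on it.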

At Gantry, we're building a tool called an evaluation store that serves as a single source of truth for your model performance metrics. You connect the evaluation store to your raw data sources (e.g., your production model) and define metrics and the data slices on which to compute them. The eval store computes the metrics for you and exposes them to downstream systems so you can use them to make operational decisions.
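To make the idea concrete, here is a toy, in-memory sketch of an evaluation store's core loop. It logs prediction records, registers named metrics and named slices, and computes every metric on every slice. The class and method names are invented for illustration and are not Gantry's API. The sketch keeps everything in memory and ignores storage, delayed labels, and scale.

```python
from typing import Callable, Dict, List, Tuple

Record = dict  # one logged prediction, e.g. features plus "prediction" and "label"


class ToyEvalStore:
    """Hypothetical in-memory evaluation store; not the API of any real product."""

    def __init__(self) -> None:
        self.records: List[Record] = []
        self.metrics: Dict[str, Callable[[List[Record]], float]] = {}
        # Every store starts with an "all" slice that matches every record.
        self.slices: Dict[str, Callable[[Record], bool]] = {"all": lambda r: True}

    def log(self, record: Record) -> None:
        self.records.append(record)

    def define_metric(self, name: str, fn: Callable[[List[Record]], float]) -> None:
        self.metrics[name] = fn

    def define_slice(self, name: str, predicate: Callable[[Record], bool]) -> None:
        self.slices[name] = predicate

    def compute(self) -> Dict[Tuple[str, str], float]:
        """Evaluate every metric on every non-empty slice; keys are (metric, slice)."""
        results: Dict[Tuple[str, str], float] = {}
        for slice_name, predicate in self.slices.items():
            rows = [r for r in self.records if predicate(r)]
            if not rows:
                continue  # skip empty slices rather than divide by zero
            for metric_name, fn in self.metrics.items():
                results[(metric_name, slice_name)] = fn(rows)
        return results


def accuracy(rows: List[Record]) -> float:
    return sum(r["prediction"] == r["label"] for r in rows) / len(rows)


store = ToyEvalStore()
store.define_metric("accuracy", accuracy)
store.define_slice("new_users", lambda r: r["days_since_signup"] < 30)
for days, pred, label in [(2, 1, 0), (5, 1, 1), (400, 0, 0), (900, 1, 1)]:
    store.log({"days_since_signup": days, "prediction": pred, "label": label})
print(store.compute())
# {('accuracy', 'all'): 0.75, ('accuracy', 'new_users'): 0.5}
```

A downstream job, such as a retraining trigger, a dashboard, or an alert, would read these precomputed numbers instead of recomputing them. That is the "single source of truth" role described above.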